AI computing divide: Scientists lack access to powerful chips for their research

Academics worldwide are struggling with insufficient computing power for AI research. A survey shows big differences in access to GPUs.

Many university scientists are frustrated by the limited computing power available to them for research in artificial intelligence (AI), according to a survey of academics at dozens of institutions worldwide.

The results1, published on 30 October on the preprint server arXiv, suggest that academics lack access to the most advanced computing systems. This could hamper their ability to develop large language models (LLMs) and to carry out other AI research projects.

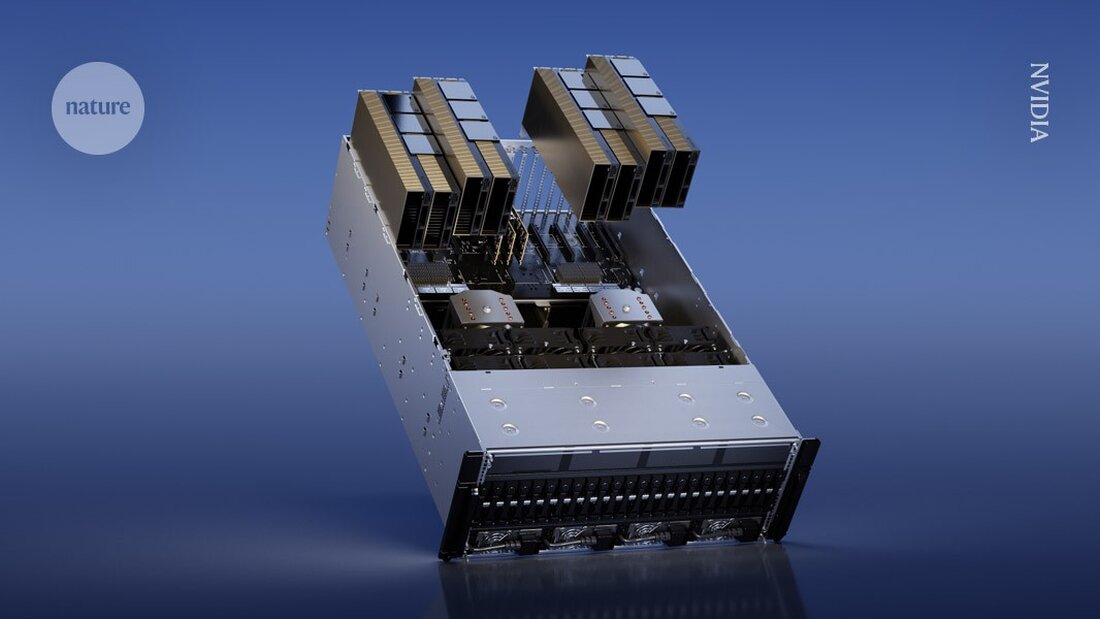

In particular, academic researchers sometimes lack the resources to buy powerful graphics processing units (GPUs), computer chips that are commonly used to train AI models and can cost several thousand dollars each. By contrast, researchers at large technology companies have bigger budgets and can spend more on GPUs. “Each GPU adds more power,” says study co-author Apoorv Khandelwal, a computer scientist at Brown University in Providence, Rhode Island. “While these industry giants may have thousands of GPUs, academics may only have a few.”

“The gap between academic and industrial models is large, but could be much smaller,” says Stella Biderman, executive director of EleutherAI, a nonprofit AI research institute in Washington DC. Research into this inequality is “very important,” she adds.

Long waiting times

To assess the computing resources available to academics, Khandelwal and his colleagues surveyed 50 scientists from 35 institutions. Of those surveyed, 66% rated their satisfaction with their computing power as 3 or lower on a 5-point scale. “They are not satisfied at all,” says Khandelwal.

Universities have different arrangements for GPU access. Some have a central compute cluster shared among departments and students, where researchers can request GPU time. Other institutions buy machines that lab members can use directly.

Some scientists reported having to wait days to gain access to GPUs, noting that waiting times were particularly long around project deadlines (see “Compute Resource Bottleneck”). The results also highlight global inequalities in access. For example, one respondent mentioned the difficulty of finding GPUs in the Middle East. Only 10% of respondents said they had access to NVIDIA's H100 GPUs, powerful chips designed for AI research.

This barrier makes pre-training (the process of feeding large data sets into LLMs) particularly challenging. “It’s so expensive that most academics don’t even consider doing science on pre-training,” says Khandelwal. He and his colleagues believe that academics offer a unique perspective in AI research and that a lack of access to computing power could hold back the field.

“It's just really important to have a healthy, competitive academic research environment for long-term growth and long-term technological development,” says co-author Ellie Pavlick, who studies computer science and linguistics at Brown University. “When you have research in industry, there are clear commercial pressures that sometimes tempt you to exploit faster and explore less.”

Efficient methods

The researchers also examined how academics could make better use of less powerful computing resources. They calculated how much time would be required to pre-train multiple LLMs using low-resource hardware – between 1 and 8 GPUs. Despite these limited resources, the researchers managed to successfully train many of the models, although it took longer and required them to use more efficient methods.

“We can actually use the GPUs we have for longer, and so we can make up for some of the difference from what industry has,” says Khandelwal.
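The article does not describe how the study estimated pre-training times. As a rough illustration only, the sketch below applies a widely used rule of thumb that training a dense transformer takes about 6 × N × D floating-point operations (N parameters, D training tokens), which may differ from the authors' method. The function name `pretraining_days`, the 1-billion-parameter model, the 20 billion tokens, the assumed peak of 10^15 FLOP/s per GPU and the 40% utilization are all hypothetical values chosen for the example, not figures from the survey.

```python
# Back-of-the-envelope estimate of pre-training wall-clock time.
# Assumption: total training compute ~ 6 * N * D FLOPs (a common rule of thumb
# for dense transformers), not necessarily the method used in the study.


def pretraining_days(n_params: float, n_tokens: float, n_gpus: int,
                     peak_flops_per_gpu: float, utilization: float) -> float:
    """Estimate the number of days needed to pre-train a dense transformer.

    n_params: number of model parameters (N)
    n_tokens: number of training tokens (D)
    n_gpus: number of GPUs working in parallel
    peak_flops_per_gpu: peak FLOP/s of one GPU (hypothetical, supplied by caller)
    utilization: fraction of peak throughput actually achieved (0 < u <= 1)
    """
    if n_gpus < 1 or not (0 < utilization <= 1):
        raise ValueError("need at least one GPU and 0 < utilization <= 1")
    total_flops = 6 * n_params * n_tokens
    # Assumes perfect scaling across GPUs (no communication overhead).
    effective_flops_per_second = n_gpus * peak_flops_per_gpu * utilization
    seconds = total_flops / effective_flops_per_second
    return seconds / 86_400  # seconds per day


if __name__ == "__main__":
    # Hypothetical example spanning the 1-8 GPU range mentioned in the article.
    for gpus in (1, 2, 4, 8):
        days = pretraining_days(1e9, 20e9, gpus, 1e15, 0.4)
        print(f"{gpus} GPU(s): {days:.1f} days")
```

Under these assumptions the estimate scales inversely with the number of GPUs, so running longer on fewer GPUs trades time for hardware. Real multi-GPU runs lose some of that speed-up to communication overhead, which this sketch ignores.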

“It's exciting to see that you can actually train a larger model than many people would imagine, even with limited computing resources,” says Ji-Ung Lee, who studies neuroexplicit models at Saarland University in Saarbrücken, Germany. He adds that future work could look at the experiences of industrial researchers at small companies, who also struggle to access computing resources. “It's not as if everyone who has access to computing power actually has unlimited computing power,” he says.

1. Khandelwal, A. et al. Preprint at arXiv https://doi.org/10.48550/arXiv.2410.23261 (2024).
