Researchers are using techniques from astronomy to recognize computer-generated "deepfake" images, which at first glance may look identical to real photos.

By analyzing images of faces with a method typically used to study distant galaxies, astronomers can measure how a person's eyes reflect light, which can reveal signs of image manipulation.

“It’s not a panacea as we have false positives and false negatives,” says Kevin Pimbblet, director of the Center for Data Science, Artificial Intelligence and Modeling at the University of Hull, UK. He presented the research at the Royal Astronomical Society's National Astronomy Meeting on July 15. "But this research offers a potential method, an important step forward, to potentially add to the tests that can be used to find out whether an image is real or fake."

Manipulated photos

Advances in artificial intelligence (AI) are making it increasingly difficult to tell the difference between real images, videos and audio and those created by algorithms. Deepfakes replace features of one person or environment with others and can make it appear as if individuals have said or done things that they did not. Authorities warn that this technology can be weaponized and used to spread misinformation, for example during elections.

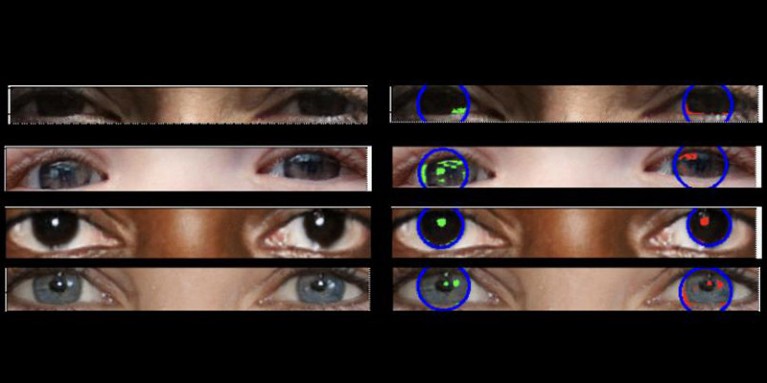

Real photos should have "consistent physics," Pimbblet explains, "so the reflections you see in the left eyeball should be very similar, although not necessarily identical, to the reflections in the right eyeball." The differences are subtle, so the researchers turned to techniques developed for analyzing light in astronomical images.

The work, which has not yet been published, formed the basis for Adejumoke Owolabi's master's thesis. Owolabi, a data scientist at the University of Hull, sourced real images from the Flickr-Faces-HQ Dataset and created fake faces using an image generator. Owolabi then analyzed the reflections of light sources in the eyes in each image using two astronomical measurements: the CAS system and the Gini index. The CAS system quantifies the concentration, asymmetry and smoothness of an object's light distribution. This technique has allowed astronomers, including Pimbblet, to characterize the light of extragalactic stars for decades. The Gini index measures the inequality of light distribution in images of galaxies.
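To give a sense of what the Gini index measures, here is a minimal Python sketch. It uses one common form of the Gini coefficient from galaxy morphology, applied to the pixel intensities of an image patch. The work is unpublished, so the exact definition and preprocessing the researchers used are not known; the function name `gini` and the toy patches are assumptions made for illustration.

```python
import numpy as np


def gini(pixels):
    """Gini coefficient of pixel intensities (hypothetical helper).

    Returns 0 when light is spread evenly over all pixels and 1 when
    all of the light sits in a single pixel.
    """
    # Flatten the patch and sort absolute intensities in ascending order.
    x = np.sort(np.abs(np.asarray(pixels, dtype=float).ravel()))
    n = x.size
    if n < 2 or x.sum() == 0:
        return 0.0  # Degenerate patch: treat as perfectly even.
    i = np.arange(1, n + 1)
    # G = sum((2i - n - 1) * x_i) / (mean(x) * n * (n - 1))
    return float(np.sum((2 * i - n - 1) * x) / (x.mean() * n * (n - 1)))


# Toy patches (illustrative only, not data from the study).
even_patch = np.full((8, 8), 5.0)   # light spread evenly
spot_patch = np.zeros((8, 8))
spot_patch[3, 3] = 1.0              # one bright reflection

print(gini(even_patch), gini(spot_patch))
```

A sharp, compact reflection in the eye would push the value toward 1, while diffuse light would pull it toward 0.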

By comparing the reflections in a person's eyeballs, Owolabi was able to correctly predict whether the image was fake about 70% of the time. Ultimately, the researchers found that the Gini index was better than the CAS system at predicting whether an image had been manipulated.
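The comparison between the two eyes could be sketched roughly as follows. This is not the researchers' pipeline, which has not been published. The helper names, the toy eye patches and especially the threshold `max_gini_gap=0.1` are hypothetical choices for illustration, and locating and cropping the eyes is assumed to have been done already.

```python
import numpy as np


def gini(pixels):
    """Gini coefficient of pixel intensities (0 = even, 1 = one bright pixel)."""
    x = np.sort(np.abs(np.asarray(pixels, dtype=float).ravel()))
    n = x.size
    if n < 2 or x.sum() == 0:
        return 0.0
    i = np.arange(1, n + 1)
    return float(np.sum((2 * i - n - 1) * x) / (x.mean() * n * (n - 1)))


def reflections_consistent(left_eye, right_eye, max_gini_gap=0.1):
    """Compare the light distribution in two eye patches.

    Returns (is_consistent, gap). The threshold is an assumed value,
    not one reported by the study.
    """
    gap = abs(gini(left_eye) - gini(right_eye))
    return gap <= max_gini_gap, gap


# Toy example: matching reflections versus a mismatched pair.
left = np.zeros((8, 8))
left[3, 3] = 1.0                      # compact highlight
right_match = left.copy()             # same highlight in the other eye
right_mismatch = np.ones((8, 8))      # diffuse light, no highlight

print(reflections_consistent(left, right_match))
print(reflections_consistent(left, right_mismatch))
```

A single cut-off like this would produce both false positives and false negatives, which fits Pimbblet's caution that the method is not a panacea.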

Brant Robertson, an astrophysicist at the University of California, Santa Cruz, welcomes the research. “However, if you can calculate a value that quantifies how realistic a deepfake image may appear, you can also train the AI model to produce even better deepfakes by optimizing that value,” he warns.

Zhiwu Huang, an AI researcher at the University of Southampton, UK, says his own research has not identified any inconsistent light patterns in the eyes of deepfake images. But "while the specific technique of using inconsistent reflections in the eyeballs may not be widely applicable, such techniques could be useful for analyzing subtle anomalies in lighting, shadows and reflections in different parts of an image," he says. “Detecting inconsistencies in the physical properties of light could complement existing methods and improve the overall accuracy of deepfake detection.”
